Ofsted Inspections and AI: What School Leaders Need To Know

Let’s be honest: AI’s integration with education has been somewhat chaotic. It’s a chicken-and-egg situation. Is AI shaping education, or is education shaping AI? The reality probably involves both: a codependency.

Education systems everywhere are trying to make sense of AI, and that includes Ofsted, which recently concluded that ‘the biggest risk [with AI] is doing nothing’.

Here, AI bias thought leader Victoria Hedlund uses her extensive knowledge of GenAI and the Ofsted guidance to help school leaders unpick what this means for you, your staff and your school context.

What AI in schools means for you

While use of AI in schools isn’t mandatory yet, Ofsted expects schools to have considered it seriously. If you’re using AI tools in school, you need strong AI literacy, clear policies, evidence of impact, and robust safeguarding measures. If you’re not using AI in education, you need solid reasons why not.

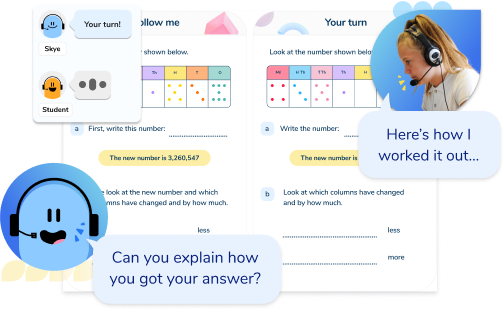

Hundreds of schools across the country are implementing AI tutoring with AI maths tutor, Skye. With unlimited sessions for unlimited pupils, primary and secondary schools can provide access for every KS2 and KS4 learner who needs additional support with maths.

Skye, the spoken AI tutor, adapts to the pace of each individual to provide personalised maths help that closes learning gaps and accelerates maths progress. Every learner begins with a skill check-in question that assesses baseline knowledge and determines the lesson pathway and the level of support Skye needs to provide. Learners finish with a skill check-out question to assess the progress made in each lesson.

A recent study by Educate Ventures Research found that pupils receiving tutoring from Skye improved their accuracy from 34% to 92% within a session.

Find out more about the best AI tutors and 5 use cases for how schools like yours are using AI tutoring.

Discover how AI tutor, Skye, can improve your students’ confidence and maths results.

Get in touch to arrange a free AI maths tutoring session.

AI in schools: 10 essential takeaways and AI actions for leaders

- Create an AI policy, outlook and ethos.

- Never upload pupils’ work or photos to any AI tool without explicit consent.

- Keep all personal data and school information out of chatbots and free AI platforms.

- Use only school-approved AI tools for school business, not personal accounts or devices.

- Check every AI tool for bias, data security, and suitability before you adopt it.

- Update your AI-related policies and permissions regularly – review them every month if needed.

- Make your AI decisions, policies, and rationale clear to staff, pupils, and parents.

- Train staff and pupils on AI risks and safe, ethical use. Keep records of this training.

- Actively gather feedback and monitor the impact of AI on learning, wellbeing, and safety.

- Keep a written record of all decisions, reviews, and updates related to AI.

A new 2025 Ofsted inspection framework rolls out in November, potentially with clearer AI expectations.

New Ofsted Framework 2025: Toolkit Checklist

A practical audit aligned with the Ofsted toolkit guidance on school inspections under the new framework for 2025. Includes an actionable checklist.

Ofsted’s approach to AI in education

‘The biggest risk [with AI] is doing nothing’.

This direct quote from the recent early adopters report demonstrates just how fuzzy the issue is for school leaders:

The use of AI is not a stand-alone part of inspections and regulation practice, and inspectors do not directly evaluate the use of AI, nor any specific AI tools.

However, inspectors can consider how AI is used across the provider and its impact on the outcomes and experiences of pupils and learners. They should expect that pupils or staff members may be using AI in connection with the education or care they receive or provide (for example, to assist pupils in completing homework).

While there is no specific expectation that schools and FE colleges will use AI, the government is keen they adopt and embrace AI as set out in its AI opportunities plan.

The direction of travel is clearly towards expected use, although AI is not yet mandatory. Look closely, and you can see some key themes that translate into practice.

Key AI themes

- Theme: ‘Inspectors do not directly evaluate the use of AI, nor any specific AI tools’

Translation: There’s no specific judgement or requirement to use any particular tool or service.

- Theme: ‘Consider how AI is used across the provider’

Translation: You need a watertight AI policy.

- Theme: ‘Its impact on the outcomes … of pupils and learners’

Translation: Causal measurability, attainment and progress metrics.

- Theme: ‘Its impact on the … experiences of pupils and learners’

Translation: Wellbeing, motivation, character development, safeguarding, behaviour, curriculum…

- Theme: ‘They should expect that … staff members may be using AI in connection with the education … [they] provide’

Translation: An expectation that staff will use AI for pedagogical purposes in the classroom, in both student-facing and teacher-facing ways.

- Theme: ‘They should expect that … staff members may be using AI in connection with the education … they receive’

Translation: Staff need AI literacy training.

- Theme: ‘They should expect that pupils … may be using AI in connection with the education or care they receive … (for example, to assist pupils in completing homework)’

Translation: It’s expected that students will be using student-facing AI as a first port of call for homework.

- Theme: ‘The government is keen that they adopt and embrace AI as set out in its AI Opportunities Plan’

Translation: You’re going to need to use it and love it.

But where does that leave you and your school?

You are currently in limbo: there are no specific metrics for assessing classroom AI use, its implementation or its impact. If you choose to use it (not yet a formal requirement), you need to know why you’re using it and how effective it is for your learners.

AI LITERACY TRAINING FOR SCHOOL LEADERS

For a step-by-step plan to embed AI in your school ethically and responsibly, Third Space Learning and Laura Knight have partnered to create this free AI literacy course for school leaders.

How do you evidence AI ahead of inspection?

Now you know the direction of travel, what does the recently revised School Inspection Toolkit say about these aspects, and how are they graded?

The School Inspection Toolkit says nothing. Nada. Zilch.

But you can infer that AI is likely to fall under wider aspects of the toolkit, even if it is not mentioned specifically.

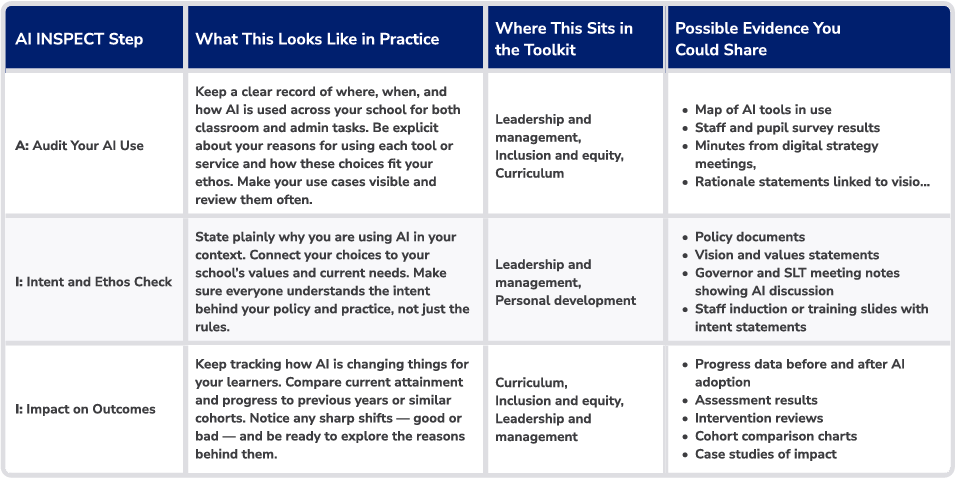

To help you navigate AI in schools and Ofsted, I’ve created a useful list of AI action and evidence points called The AI INSPECT Self-Audit, reflecting current Ofsted guidance.

Safeguarding and risk assessment

Just like phones and social media, anything technology-related has huge safeguarding implications, and anything involving safeguarding involves risk mitigation.

Risk made it into the title of Ofsted’s latest communication on AI (the early adopters report). The directive here is clear: the biggest risk is doing nothing. No more AI ostriches.

So, in true teaching style, let’s borrow and infer from the examples of good practice contained within this report:

Commonly, the leaders we spoke to were clear about the risks of AI around bias, personal data, misinformation and safety. They had different mechanisms and procedures to address these. Some had a separate AI policy, while others had added AI to relevant existing policies, including those for safeguarding, data protection, staff conduct, and teaching and learning. The pace of change meant that many leaders were updating their AI policies as often as monthly. Importantly, these leaders encouraged regular and open discussion about AI between staff and with pupils to mitigate some of the risks associated with AI. This included developing their curriculum to teach pupils about the advantages and disadvantages of AI and how to use it safely.

Implications for your practice:

- Name the risks: bias, data, misinformation, and safety.

- Choose your policy route: stand-alone AI policy or update what you have.

- Review policies often (monthly if needed).

- Talk AI openly, with staff, pupils and parents.

- Train staff, share what you learn, keep the dialogue going.

- Build AI safety and critical thinking into your curriculum.

- Keep records to show your thinking and progress.

Let’s expand on point 1, ‘Name the risks: bias, data, misinformation, and safety’.

A further concern is the risk that AI may perpetuate or even amplify existing biases. AI systems rely on algorithms trained on historical data, which may reflect stereotypical or outdated attitudes. Education providers need strong governance mechanisms to reassure parents, staff and pupils that AI tools have been evaluated for bias, data security and suitability for different demographics, before they are adopted in practice.

Tips for your AI practice:

- Check AI tools for bias before using them and consider where your AI’s data and training come from.

- Ensure you have strong governance over any AI adoption.

- Reassure parents, staff, and pupils that you’ve checked for bias, data security, and suitability.

- Only adopt AI tools after they pass your evaluation for equity in education and safety.

- Keep clear records of your review and decision-making process.

On point 4, the report states:

Schools and colleges also need to be transparent about their use of AI. This includes informing parents, particularly if pupils are using AI.

The DfE’s current position is that schools and colleges should establish clear accountability and transparency around the AI systems they use:

- Be open about all AI use in your school or college.

- Inform parents whenever pupils use AI tools.

- Make your AI policies, practices, and decisions visible to your community.

- Set out clear lines of accountability for who oversees AI in your setting.

- Follow DfE guidance by prioritising transparency and clarity at every stage.

As with all safeguarding, you’ll notice a common theme of record-keeping. One of the most shocking updates of late is the news that the updated KCSIE document does not contain any specific mention of AI, instead referring leaders to the ‘Plan technology for your school’ service. The advice: review online safety and risk annually using tools such as the 360 degree safe self-review tool or the LGfL online safety audit.

Data protection and compliance

As with safeguarding, school leaders are instructed to follow GDPR when it comes to data protection and compliance:

The literature indicates that adopting AI into educational systems involves navigating a range of barriers and significant challenges. For instance, the use of AI by staff and pupils needs robust safeguarding and governance frameworks. This is because generative AI systems collect and analyse large data sets, and there is significant potential for breaches of data privacy. Guidelines from the UK’s Information Commissioner’s Office stress the importance of ensuring that AI tools fully comply with data protection regulations such as the General Data Protection Regulation (GDPR).

Accordingly, there are some things you should check straight away. Ensure your teachers aren’t:

- Uploading children’s work without permission (it’s their intellectual property).

- Uploading photos of pupils or their class into chatbots or tools to make colouring sheets (yes, this is a thing on Instagram).

- Allowing any personal data or information to be used in chatbots without permission.

- Using non-school-approved AI chatbots at home or on personal devices for school-related work.

Free versions of chatbots often use the data you enter to train their models, so details about your pupils could end up ‘feeding the model’ without their consent.

Current Ofsted framework and AI integration

Historically, Ofsted documentation has shied away from the nitty-gritty of technology, choosing to focus more on the justification and impact of how you use it within your school.

Let’s look at how things have unfolded in the last few years:

- September 2019 Education Inspection Framework (EIF): The launch of the framework focusing on curriculum, behaviour, leadership, and safeguarding, with no specific reference to AI or digital technology.

- April 2024 Ofsted’s Approach to Artificial Intelligence (AI): Ofsted’s first formal statement outlining its approach to AI, covering both its internal processes and its expectations for schools.

- December 2024 Terms of Reference for Ofsted AI Review: This announcement set the scope for Ofsted’s commissioned review into the use of AI in education.

- February 2025 – 11th June Consultation on New Education Inspection Framework and Report Cards: I’m pretty sure everyone reading this is aware of Ofsted’s sector-wide consultation proposing new multi-area report cards to replace single-word judgements. It does, however, include references to technology policy and leadership. It was updated in June with a postponed response timeline.

- June 2025 AI in Schools and Further Education: Findings from Early Adopters: Ofsted’s most recent research report documents the experiences and challenges of 21 schools and FE colleges using AI.

- June 2025 Generative AI in Education: Support Materials (DfE): Department for Education guidance referenced by Ofsted, providing advice for schools on the use of generative AI.

- Forthcoming, November 2025 (ITE January 2026) New Education Inspection Framework and Report Card Model: Ofsted’s planned update, with a one-term notice period following the consultation response. Full inspections are scheduled from November 2025, and ITE inspections from January 2026.

So, what’s the narrative? Ironically, Ofsted’s approach has shifted from tech being invisible to “the biggest risk is doing nothing”.

Inspection methodology for AI-enhanced schools

While Ofsted doesn’t have specific AI metrics yet, inspectors will likely examine AI use through existing lenses: curriculum impact, leadership decisions, and safeguarding effectiveness. They’ll want to see evidence that any AI tools genuinely improve learning outcomes rather than just creating digital busy work.

The future of AI in Ofsted inspections

The direction of travel is clear: AI use in education is expected, and it has become a matter of governance and leadership rather than technical know-how. Is this because inspectors’ own digital literacy needs to improve before they can judge schools on it? Very likely.

Ofsted’s recent reports point to clear lines of inquiry that could eventually feature more explicitly in the inspection toolkits, such as:

- Does your use of AI help pupils learn more or make progress?

- Can you provide evidence that AI is improving outcomes?

- How are you checking for errors or “hallucinations” in AI-generated answers?

- Have you considered safeguarding risks with AI? Is this documented?

- Are you weighing benefits against risks for pupils and staff?

- Can you provide evidence for this?

- How do you make sure AI supports, not undermines, teachers’ professional judgement and identity?

- Does your AI align with your pedagogical values and ethos?

- How can you demonstrate this?

Something to keep your eye on for the future.

How schools could build this evidence

Schools implementing AI tools should consider documenting:

- Detailed usage logs showing which pupils accessed support and when.

- Examples of how AI identifies learning gaps or misconceptions.

- Evidence of teacher oversight and professional judgement in AI-supported lessons.

- Clear policies showing how AI use aligns with school values and safeguarding requirements.

This approach gives school leaders practical frameworks they can adapt to whatever AI tools they might use, without making specific claims about particular products or outcomes.

After this whistle-stop tour of Ofsted’s recent involvement with AI, there are two takeaways for you:

- Use the AI INSPECT Self-Audit and the 10 essential actions for leaders.

- Learn to love it, or else provide robust evidence why you don’t!

Key actions needed now:

- Develop or update your AI policy

- Ensure any AI use has a clear educational justification

- Check your data protection and safeguarding measures

- Keep detailed records of decisions and impacts

- Prepare to demonstrate how AI supports (not replaces) teaching

Ofsted’s approach to AI is impact first. Inspectors do not grade tools or use, but they look for clear policies, strong safeguarding and evidence that AI improves pupil outcomes and experiences, with a clear warning that the biggest risk is doing nothing.

There is no single national AI policy for schools in the UK. You need a school policy that covers consent, GDPR, bias checks, approved tools, transparency with parents, staff and pupil training, impact monitoring and rigorous record-keeping, among other things.

DfE guidance supports careful adoption where AI improves learning, with accountability and transparency for any systems used. The 2025 Generative AI Support Materials and the AI Opportunities Plan emphasise governance, risk assessment, GDPR compliance, curriculum integration, staff training and clear parent communication. The Chartered College of Teaching and the DfE have also created training materials, ‘Safe and effective use of AI in education’, which lead to a certificate upon completion.

References

Department for Education (DfE) (2025) Generative AI in Education: Support Materials. Available at: https://www.gov.uk/government/collections/using-ai-in-education-settings-support-materials (Accessed: 5 August 2025).

Department for Education (DfE) (2025) AI Opportunities Plan. Available at: https://www.gov.uk/government/publications/ai-opportunities-in-education (Accessed: 5 August 2025).

Department for Education (DfE) (2024) Keeping Children Safe in Education (KCSIE). Available at: https://www.gov.uk/government/publications/keeping-children-safe-in-education--2 (Accessed: 5 August 2025).

Hedlund, V. (2025) A.I. INSPECT Self-Audit. GenEdLabs.ai. Available at: https://www.genedlabs.ai/ (Accessed: 5 August 2025).

LGfL (n.d.) Online Safety Audit Tool. Available at: https://safeguarding.lgfl.net/audit (Accessed: 5 August 2025).

National Education Network (n.d.) 360 Degree Safe Self-Review Tool. Available at: https://360safe.org.uk/ (Accessed: 5 August 2025).

Ofsted (2019) Education Inspection Framework (EIF). Available at: https://www.gov.uk/government/publications/education-inspection-framework (Accessed: 5 August 2025).

Ofsted (2024) Ofsted’s Approach to Artificial Intelligence (AI). Available at: https://www.gov.uk/government/publications/ofsteds-approach-to-ai/ofsteds-approach-to-artificial-intelligence-ai (Accessed: 5 August 2025).

Ofsted (2024) Terms of Reference for Ofsted’s AI Review. Available at: https://www.gov.uk/government/publications/ofsted-research-on-artificial-intelligence-in-education-terms-of-reference (Accessed: 5 August 2025).

Ofsted (2025) AI in Schools and Further Education: Findings from Early Adopters. Available at: https://www.gov.uk/government/publications/ai-in-schools-and-further-education-findings-from-early-adopters (Accessed: 5 August 2025).

Ofsted (2025) Consultation on Education Inspection Framework and Report Cards. Available at: https://www.gov.uk/government/consultations/improving-the-way-ofsted-inspects-education/improving-the-way-ofsted-inspects-education-consultation-document#proposal-1-report-cards (Accessed: 5 August 2025).

Information Commissioner’s Office (ICO) (2024) Artificial Intelligence (AI) Guidance. Available at: https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/artificial-intelligence/ (Accessed: 5 August 2025).

DO YOU HAVE STUDENTS WHO NEED MORE SUPPORT IN MATHS?

Skye – our AI maths tutor built by teachers – gives students personalised one-to-one lessons that address learning gaps and build confidence.

Since 2013 we’ve taught over 2 million hours of maths lessons to more than 170,000 students to help them become fluent, able mathematicians.

Explore our AI maths tutoring or find out about the AI tutor for your school.