Using AI In Schools: What The DfE Guidance Means For You

The Department for Education is publishing an increasing amount of guidance on the use of AI in schools. All support is welcome, but as school leaders and teachers you will be at different stages of awareness and understanding of the use and potential misuse of AI. After all, it’s only one of multiple priorities you’re grappling with.

In this article, we explore how best to approach the guidance available to schools: implementing AI safely and responsibly, supporting teachers, boosting student performance and opportunity, and managing ethical considerations.

For many schools, AI has quickly become a leadership issue. Senior leaders are being asked for AI policies, implementation plans, and risk assessments, often before they have had the chance to build confidence in the subject themselves.

This comes on top of an already demanding strategic agenda, but without the benefit of widespread training. In secondary schools, this work is most likely to sit with assistant heads responsible for teaching and learning, digital strategy, or CPD. It may also fall to IT managers, heads of Computer Science, digital learning leads, safeguarding or online safety coordinators, or others with responsibility for innovation, staff development, or curriculum design.

Wherever your expertise and experience with AI currently sit, this guidance presents a valuable opportunity to engage with the theme in a timely and structured way.

Let’s start with the expanded guidance on artificial intelligence, published in June 2025: Generative AI in Education Settings together with the support materials.

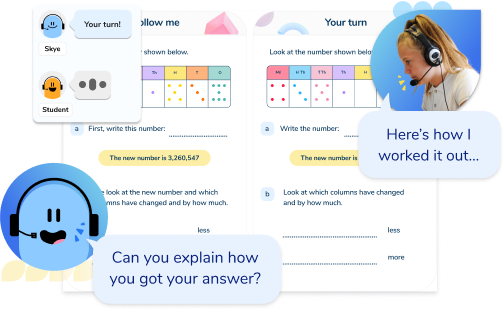

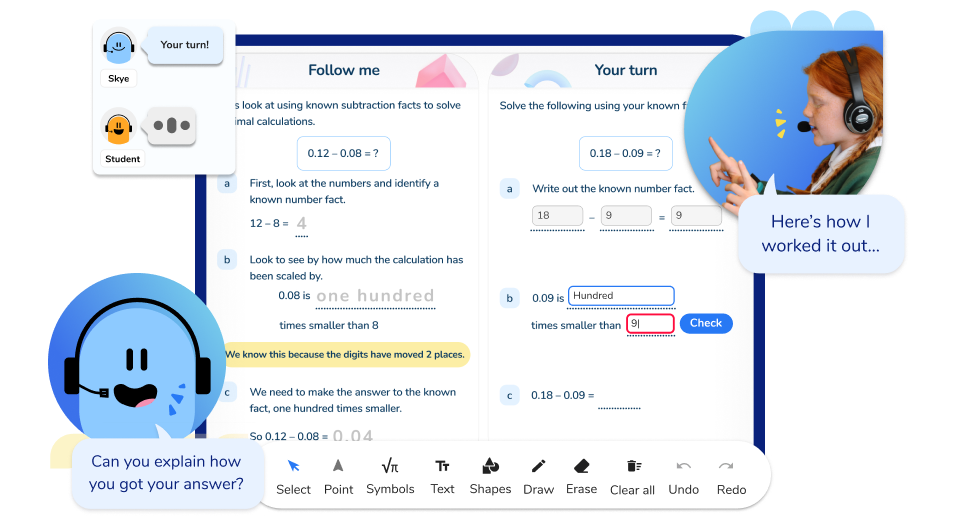


Generative AI in Education Settings

The DfE collection, Using AI in education settings: support materials, helps school and college leaders and teachers understand the new possibilities of generative AI. It provides evidence-informed guidance and clear, practical support for navigating the risks while harnessing the opportunities this new technology provides.

Why the DfE’s artificial intelligence guidance matters now

AI use is no longer a distant concept for the education sector, with over half of young people in the UK having already used generative AI tools, and many schools exploring how to implement AI safely and effectively.

The proliferation of AI tools in the market is creating both opportunities and challenges for school leaders, especially around data protection, safeguarding, academic integrity, and ethical considerations.

In addition, schools are under increasing pressure from challenges around finance, recruitment and retention, workload, standards and outcomes, and widening equity gaps. The sector has been crying out for guidance on how best to approach generative AI, and this is a very positive starting point.

AI use is already widespread among teachers: nearly half reported using generative AI in 2024 for a range of tasks to help reduce workload, including:

- Content generation

- Building model answers

- Supporting lesson planning

What is concerning, however, is that this is often happening in schools that have not formally implemented AI use, and may not have strong AI literacy or a policy in place.

This new guidance helps us all be clear: this is no longer a niche experiment, and it is time to build artificial intelligence into our digital strategies.

There is, of course, a risk in doing nothing, which Ofsted has raised as the biggest concern with AI. Failing to engage with AI now may leave schools behind as the technology becomes embedded in the wider education sector. Without a clear strategy, there is a danger of inconsistent practice, increased workload, and exposure to legal and ethical risks, including being out of alignment with the latest Keeping Children Safe in Education guidance.

The DfE’s guidance offers a sensible, evidence-informed starting point for schools and colleges to implement AI safely, ethically, and effectively.

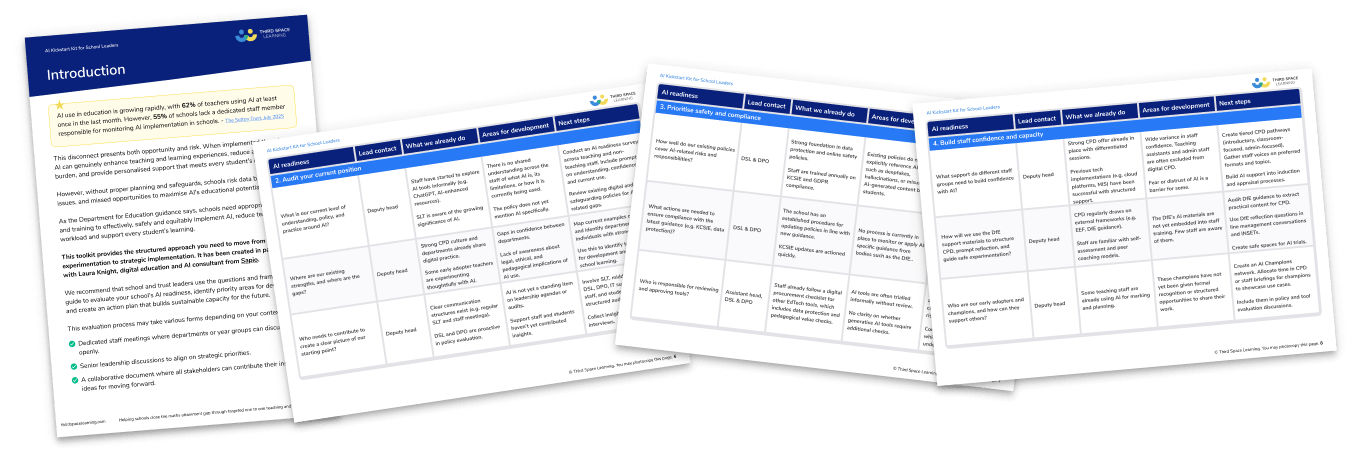

AI Kickstart Kit for School Leaders

A practical planning tool aligned with the DfE guidance on artificial intelligence. Covers the essential questions you need to ask when implementing new AI tools in your school and includes a completed and editable AI integration planning tool.

What the DfE has published for the education sector: Content and collaboration

The DfE’s resource collection, Using AI in education settings: support materials, is the result of collaboration between school and college leaders, academic researchers, and experts in educational technology.

It was delivered in partnership with the Chiltern Learning Trust and the Chartered College of Teaching. Their collaboration makes sure that the artificial intelligence resources are practical, evidence-informed, and directly relevant to the needs of schools and colleges.

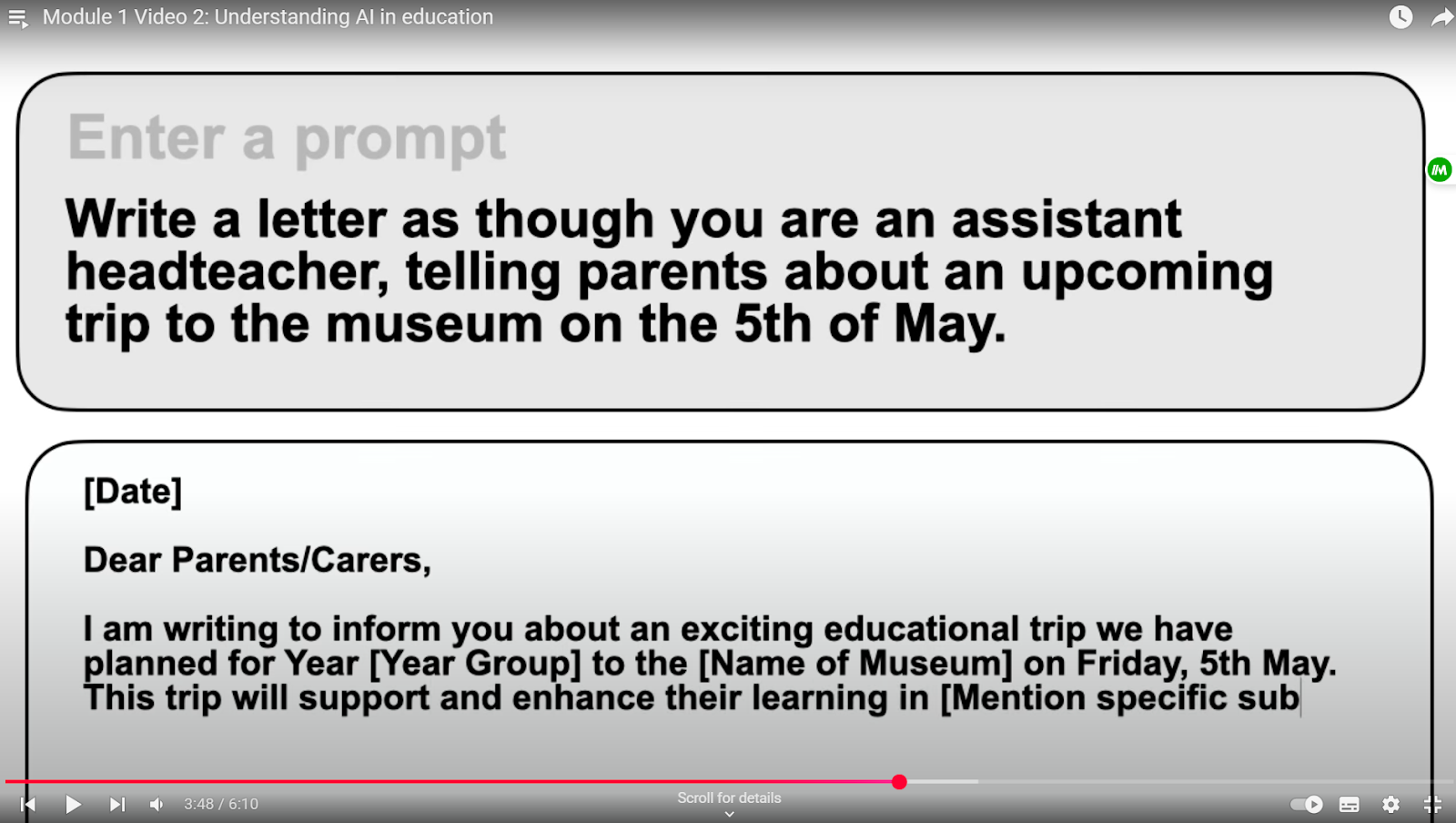

The DfE materials for teachers include:

- Ready-to-use slides for staff training and group discussion

- Video presentations with complete transcripts

- Structured modules in short, manageable chunks

- Clear guidance on safety, ethics, and data protection

- Knowledge consolidation activities, including reflection activities and questions

- Case studies and examples

- Do’s and Don’ts – including pitfalls to avoid

- Audit and planning tools

The guidance is grounded in the realities of schools and colleges, with a focus on supporting brilliant teachers and support staff to make informed decisions about AI systems and tools.

Alongside the teacher support, there is also a separate, powerful toolkit of materials for school and college leaders, which helps explore the strategic decision-making needed to integrate AI in education effectively and safely.

This artificial intelligence integration toolkit includes content on the opportunities and strategic benefits of generative AI, safety and risk management, embedding AI in your wider digital strategy, and some great thinking about policy, governance and compliance.

This all comes with practical recommendations about

- Professional development

- Curriculum and skills

- Stakeholder engagement

- Implementation planning

I was delighted to play a part in the powerful team who created these materials, which included colleagues from the Department for Education, The Chartered College of Teaching, the Chiltern Learning Trust, and highly regarded experts in digital education, leadership and AI, including Trudi Barrow, Bukky Yusuf, Prof Miles Berry, Al Kingsley, and Dr Neelam Parmar. This group of experts is deeply committed to building workable and ethical practice in school, and grounding it in the reality of the present, not an idealistic future.

5 key themes from the DfE AI in Education Guidance

1. Safety and being responsible

“Safety First: AI with Safeguards, Not Shortcuts”

The guidance is unequivocal: safety is the non-negotiable foundation for any use of artificial intelligence in schools and colleges. This means robust data protection, clear boundaries around intellectual property, and a culture of vigilance around new risks.

The latest Keeping Children Safe in Education (KCSIE) updates now explicitly reference AI, requiring leaders to ensure that filtering, monitoring, and safeguarding policies address the unique challenges posed by generative AI tools. The message is clear: schools must conduct risk assessments, use only approved enterprise-grade tools, and communicate transparently with staff, students, and parents about how artificial intelligence is used and protected.

2. Ethics and fairness

“Fairness by Design: AI That Serves Every Learner”

Ethical leadership is at the heart of the DfE’s approach. The guidance calls for a critical stance on bias, fairness, and inclusion, recognising that AI systems can amplify existing inequalities if not carefully managed.

Leaders are urged to be proactive here: we must check for bias in AI outputs, champion academic integrity, and ensure that artificial intelligence is used to support, not undermine, inclusion and accessibility. This means adapting AI-generated content for diverse learning needs and being transparent about the limitations and risks of these technologies.

3. Workload, timesaving, efficiency, and planning

“Time Well Spent: AI That Frees Teachers to Teach”

One of the most immediate benefits of AI is its potential to reduce teacher workload and streamline routine and administrative tasks. The guidance highlights how AI can support lesson planning, resource creation, data analysis, and efficiency, with the goal of freeing teachers and leaders to focus on what matters most: inspiring teaching and supporting student learning.

However, the guidance is clear that efficiency must never come at the expense of quality, safety, or professional judgment.

Piloting AI tools, gathering feedback, and iterating practice are all recommended to ensure that using AI in classrooms delivers genuine value, and that we don’t end up using tools just for the sake of using them. Here, Deputy Headteacher and creator of AI Teacher Prompts, Neil Almond, reveals how to enhance your maths lesson planning and resource creation with ChatGPT.

4. Professional judgement, the human in the loop, and oversight

“Human at the Helm: AI as Assistant, Not Authority”

The DfE guidance is very clear: AI must always operate under human oversight. Professional judgement, critical thinking, and contextual adaptation are essential.

AI is a tool to inform decisions, not to make them. Leaders are encouraged to embed critical evaluation into daily practice, maintain oversight in sensitive areas such as assessment and safeguarding, and resist the temptation to outsource thinking to algorithms.

The message is clear: technology should amplify, not replace, human intelligence and ethical responsibility.

5. Staff confidence, collaboration, learning, and CPD

“Confidence Through Collaboration: Learning Together”

Building staff confidence is essential for safe and effective AI adoption. The guidance recommends integrating the teacher toolkit into CPD, creating a culture of exploration and peer learning, and providing role-specific training.

Leaders are encouraged to act as convenors and connectors here: establish working parties, share experiences, and support staff at all career stages.

Ongoing, context-specific professional development (not one-off training) is key to embedding AI safely and sustainably. Collaboration with governors, parents, and students is also vital to ensure a whole-community approach.

Taking the lead on AI: a 10-step blueprint

Here’s how to get started

1. Set a clear vision and purpose

- Articulate your school’s vision for AI and digital technology.

Consult widely with staff, governors, and students to define the purpose of AI in your context. Ensure your vision aligns with your school development plan and educational values.

- Communicate this vision clearly.

Use staff briefings, newsletters, and parent communications to explain why you are engaging with AI and what you hope to achieve.

- Establish a steering group or working party.

Nominate a small team to lead on implementation, gather evidence and feedback, and support the roll-out of your AI approach. This team could include digital leads, senior leaders, classroom teachers, and IT or safeguarding staff.

2. Audit your current position

Use the Leadership Toolkit’s audit tools

Reflect on your current practice across strategy, policy, staff training, curriculum, student use, and procurement.

Identify strengths and gaps

Involve your leadership team and IT/data protection leads to ensure a comprehensive understanding of your starting point.

3. Prioritise safety, data protection, and safeguarding

Review and update policies

Ensure your safeguarding, data protection, and acceptable use policies explicitly address AI, referencing the latest KCSIE guidance and DfE product safety expectations.

Conduct risk assessments for all AI tools

Only approve AI technology and AI tools that meet enterprise standards, protect data and intellectual property, and have robust filtering and monitoring in place.

4. Build staff confidence and capacity

Integrate the teacher materials into your CPD programme

Ensure all staff complete the four modules, with additional support for trainees, ECTs, and those in specialist roles.

Encourage a culture of exploration and peer learning

Establish working parties or AI steering groups to trial AI tools, share experiences and detailed feedback, and reflect on practice.

Identify early adopters and AI champions

Ask team members to model confident, ethical, and effective use of AI in the classroom.

Provide role-specific training

Tailor CPD to the needs of teachers, support staff, and leaders, focusing on safe, effective, and ethical use.

5. Trial and evaluate AI tools strategically

Start small and purposeful

Pilot AI tools in targeted areas such as planning lessons, resource creation, or administrative tasks to reduce workload. Use the audit and planning templates to structure your approach. This approach can also be applied to AI tutoring such as Third Space Learning’s spoken AI maths tutor, Skye. Remember to pilot even the best AI tutors and tools.

Distinguish between generative AI tools and AI-powered features

Generative AI tools include large language models for writing support, whereas AI-powered features embedded within existing products include adaptive platforms, automated marking, or AI-driven tutoring tools. This distinction will help you make more informed decisions about value, safety, and pedagogy.

Gather evidence and detailed feedback

Monitor impact on teacher workload, teaching quality, and student learning and outcomes. Adjust your approach based on staff and student feedback.

You can read more on how schools are using AI tutoring and on how AI maths tutor Skye impacts student learning and outcomes, as well as EdTech Ninja Jodie Lopez’s AI tutoring review of a Year 5 and a Year 10 session and Neil Almond’s review of an AI tutoring SATs revision lesson.

Or you can find out more about the transition from traditional to AI tutoring and why AI tutors will never replace teachers.

6. Engage middle leaders, governors, and the wider community

Involve middle leaders and governors in strategic planning

Invite them to participate in working groups, policy development, and evaluation of AI initiatives.

Communicate regularly with parents and carers

Be transparent about how AI technology and tools are being used, the safeguards in place, and the benefits and risks for students.

Consult students and seek their perspectives

Use surveys, focus groups, or digital leaders to ensure student voice informs your approach.

7. Co-construct and update policy

Develop or update your AI policy collaboratively

Involve staff, governors, and, where appropriate, students and parents in drafting and reviewing AI policy documents.

Align with statutory guidance and best practice

Reference DfE policy papers, product safety frameworks, and digital standards.

8. Embed AI in your digital strategy

- Integrate AI into your wider digital and school development plans: ensure alignment with your vision, infrastructure, goals for educational outcomes, and improvement priorities.

- Use EdTech frameworks (e.g., SAMR, TPACK) to guide implementation: support staff to reflect on how AI enhances, rather than replaces, effective teaching and learning.

9. Leverage local networks and sector support

- Join or form local networks: collaborate with other schools, trusts, or colleges to share resources, case studies, and lessons learned.

- Engage with national initiatives: make use of resources from the Chartered College of Teaching, Oak National Academy, and the DfE’s ongoing support.

10. Plan for sustainable implementation

Follow a structured implementation process

Use the EEF’s Explore–Prepare–Deliver–Sustain model to guide your journey, ensuring ongoing review and adaptation.

Celebrate progress and share success

Recognise staff efforts, share good practice, and maintain momentum through regular communication and reflection.

AI LITERACY TRAINING FOR SCHOOL LEADERS

For a step-by-step plan to embed AI in your school ethically and responsibly, Third Space Learning and Laura Knight have partnered to create this free AI literacy course for school leaders.

Limitations of the guidance, and what to watch for next in artificial intelligence for educational institutions

The Department for Education’s teacher and leader toolkits represent a positive step forward in supporting schools and colleges to use artificial intelligence safely and effectively.

However, important limitations remain. The guidance, while comprehensive in its foundational approach, offers only a limited range of real-world case studies and practical examples, which may not fully address the diversity of school contexts or the breadth of emerging AI applications.

Also, its static nature means that advice on tool selection, data protection, and best practice risks becoming outdated as technology evolves rapidly.

The materials provide a strong starting point for safeguarding and data protection, but the technical detail of AI systems (particularly around data flows, model training, and third-party integrations) can be hard to understand and may outpace the expertise available in many settings.

Two important points:

- The guidance does not yet offer detailed protocols for student use.

- Nor does it fully address the digital divide, equity, or the needs of vulnerable learners.

We should keep banging the drum on equity in education and inclusion, with targeted strategies and funding advice to bridge the digital divide and support SEND and vulnerable learners. There is so much potential for the transformative power of Generative AI in this context.

Finally, let’s also consider the importance of building robust mechanisms for evaluating impact, sharing feedback, and establishing governance structures. These are only in early stages, leaving schools to navigate some of these challenges independently.

Do check out the Chartered College’s ongoing work in this space, as well as the Good Future Foundation’s free AI Quality Mark, which will help with the review and evaluation of your school’s progress.

DfE guidance conclusions: A sensible place to begin

The Department for Education’s AI resources offer a robust and practical foundation for schools and colleges navigating the opportunities and challenges of artificial intelligence in education. Whether you are a school or college leader just beginning to explore AI in schools, or already integrating AI tools into your digital strategy, these materials provide clear, evidence-informed guidance that places safety, ethics, and educational outcomes at the centre.

The toolkit encourages leaders, teachers, and support staff to move forward with confidence and care: learning fast, acting thoughtfully, and always keeping professional judgement and human intelligence at the heart of decision-making.

Engaging with these resources is not about racing to implement AI such as intelligent tutoring systems, or adopting the latest educational technology for its own sake. It is about building a culture of curiosity, critical thinking, and collaboration, where AI use is purposeful and aligned with your school’s values.

The guidance is designed to support you at every stage of your AI in education journey, helping you to enhance teaching, streamline administrative tasks, and provide students with tailored support.

By starting here, you are investing in the long-term wellbeing and success of your pupils, staff, and wider learning environment, even in the face of recruitment and retention challenges, huge financial pressures, and the ever-present digital divide.

Resources:

- Department for Education Resource Collection: Using AI in education settings: support materials – GOV.UK

- The Good Future Foundation AI Quality Mark (free): AI Quality Mark | Good Future Foundation

- The Chartered College of Teaching AI Training Programme (free): Safe and effective use of AI in education – Chartered College of Teaching

- A Little Guide for Teachers: Generative AI in the Classroom by Laura Knight

- DfE Product Safety Framework: Generative AI: product safety expectations – GOV.UK

- DfE Policy Paper: Generative artificial intelligence (AI) in education – GOV.UK